Step 4

By Vinzenz Unger and Anchi Cheng

Combination of projection data from different images

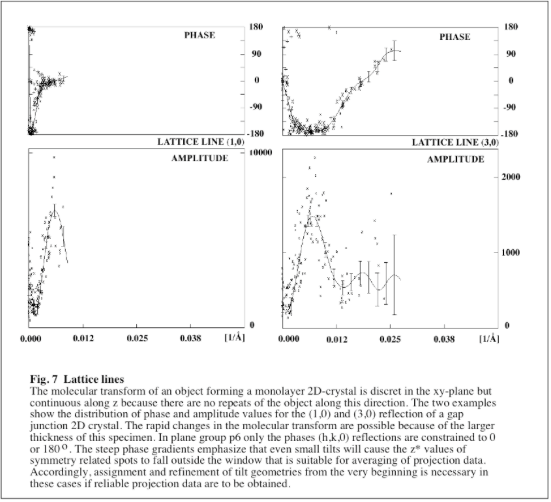

Projection data describe an untilted view of the whole structure projected onto a single section and comprise a set of unique reflections with indices (h,k,0). Despite the very limited amount of reciprocal space that needs to be sampled in projection, the data from several images need to be combined because not all structural details are preserved in every electron micrograph. Exactly how many images are required will depend on the quality of the specimen and the symmetry. Generally, the higher the symmetry and the better the specimen, the fewer images will be required. A second important aspect is the thickness of the specimen. A thick specimen will always require more images than a thinner specimen because its molecular transform can change much faster, i.e. more measurements are needed to determine the transform values with sufficient reliability. For instance, Table 1 above indicates that the phase constraints for 6-fold symmetry in images of frozen hydrated crystals of gap junction channels break down even at very low tilts. An explanation for this behavior can be found by looking at the three-dimensional transform (Fig. 7).

The (1,0) and (3,0) lattice lines are good examples showing how fast the transform of this specimen can change, i.e. how quickly phase constraints are abolished (note: only the phases of (h,k,0) reflections are constrained in p6). In cases like this it can be very difficult to obtain enough images of truly untilted crystals and hence part of the projection data will have to be generated by including data from crystals at low tilts. Luckily, many specimens are likely to be thinner and hence are less complicated. However, regardless of the specimen characteristics, aiming for an average 5-10-fold redundancy in the data structure is good policy, because it will help in the data refinement procedures and give sound statistics for the determination of the phases.

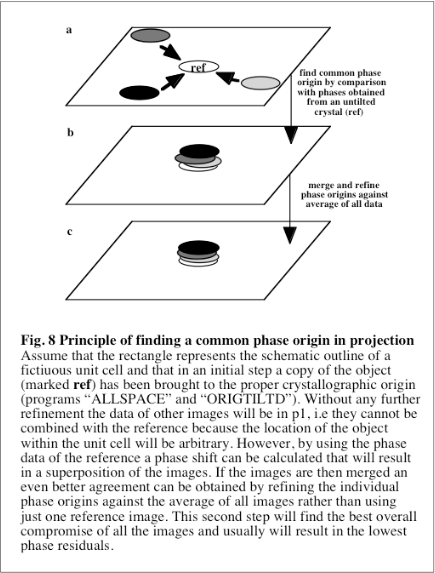

The basic principle involved in combining data from several images is to find their common phase origin, i.e. the location within a generalized unit cell of given symmetry where the phases of different images adopt “identical” values for “identical” positions in the molecular transform. A simplified view of the procedure is given in Fig.8 and an example is shown in Table 2.

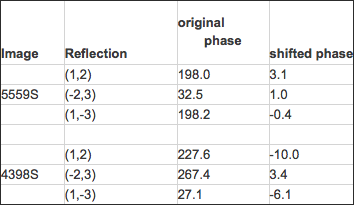

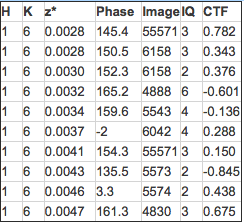

Table 2 Effect of phase shift to common origin

Image 5559S was first brought to the p6 origin before serving as reference to determine the phase shift necessary to align image 4398S. After shifting both images onto the sixfold axis of symmetry the phases of symmetry related reflections agree with each other and are close to one of the expected centrosymmetric phase values (0/180˚). In practice the following steps have to be carried out to create a set of projection data:

• Identify images of truly untilted crystals (program: ALLSPACE). If an image fails to show the expected symmetry the crystal may have been tilted enough to abolish phase constraints. In this case the tilt geometry needs to be determined (see ….) if the image is to be included at this stage.

• Choose the best image as reference to start the merging process. Criteria to make this decision are: overall resolution, overall phase residual for actual symmetry, theoretical phase residual for comparison 0 vs. 180˚ (where applicable) and the fall-off of the image derived amplitudes (ratio of average amplitude at highest resolution vs. amplitude at <15Å resolution).

• Bring the phases of the reference image to the appropriate crystallographic phase origin provided by ALLSPACE. To do this “merge” the image data against a “dummy-file” that only contains an identifier number (program: ORIGTILTD, NPROG=0).

• Use the resulting data list to determine the phase shifts that will superimpose the remaining images of untilted crystals with the reference image (see annotations for program: ORIGTILTD, NPROG=1). If explicit tilt parameters are used, a z* window must be specified within which reflections will be averaged for calculating a reference phase value. Usually a cut-off equal to or smaller than [1/(2 × estimated specimen thickness)] is sufficient. While the influence of small tilts will be almost negligible for thin specimens, a careful analysis is necessary for thick specimens. In these cases projection data will be very noisy if no correction is made even for small specimen tilts.

• Merge the images with the reference data list and re-refine all phase origins against the average from all images.
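
The origin search in the steps above can be pictured as a small grid search. The following is a minimal sketch and not the ORIGTILTD implementation: the dictionary layout, the function names and the convention that an origin shift (ox, oy), given in degrees, changes the phase of reflection (h,k) by h·ox + k·oy are assumptions made for illustration.

```python
def wrap(deg):
    """Wrap an angle in degrees into the interval [-180, 180)."""
    return (deg + 180.0) % 360.0 - 180.0


def shift_phases(phases, ox, oy):
    """Apply an origin shift (ox, oy), in degrees per unit of h and k.

    phases: {(h, k): phase_deg}. The sign convention is an assumption.
    """
    return {(h, k): wrap(p + h * ox + k * oy) for (h, k), p in phases.items()}


def phase_residual(phases, reference):
    """Mean absolute phase difference over reflections present in both sets."""
    common = [hk for hk in phases if hk in reference]
    if not common:
        raise ValueError("no common reflections")
    return sum(abs(wrap(phases[hk] - reference[hk])) for hk in common) / len(common)


def find_origin(phases, reference, step=6.0):
    """Grid search over one unit cell (0..360 degrees in both directions).

    Returns (residual, ox, oy) for the shift with the lowest residual.
    """
    best = None
    n = int(round(360.0 / step))
    for i in range(n):
        for j in range(n):
            ox, oy = i * step, j * step
            res = phase_residual(shift_phases(phases, ox, oy), reference)
            if best is None or res < best[0]:
                best = (res, ox, oy)
    return best
```

A coarse grid followed by a finer search around the best position keeps such a search cheap. The residual returned here is unweighted; weighting by IQ value would be a natural extension.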

Note that phase measurements even from a single image will still show some spread (see Table 2) at the end of all refinements. This variation represents noise and consequently the superposition of different images will never be absolutely perfect. It should be mentioned that any refinement of image parameters like phase origin, amount of underfocus and astigmatism, as well as refinement of the tilt geometry (where applicable), primarily aims at making the data at hand more self-consistent. This does not necessarily mean that the data become more “correct”. It is the responsibility of the operator to carefully evaluate the outcome of any step at this stage. The following guidelines can help to avoid potential problems:

• Do not use the nominal values of underfocus and tilt at which the image was recorded. Instead, get more reliable starting estimates for both parameters from the calculated transform. This is extremely important when dealing with a thick specimen.

• Never add images one-by-one because this can bias data and may make their correction very difficult. Always add images in groups (i.e. at least 3-4 images at a time if some reference data are already available). Use the amount of symmetry and specimen thickness to judge how many images should be added at a time. The higher the symmetry and the thinner the specimen the smaller the number of images needed. These points are particularly important for building up a three-dimensional data set de novo.

• Use data to ~15Å only to determine the initial phase origin in order to minimize the impact of errors in the starting estimates for underfocus, astigmatism and tilt geometry. For this calculation, use only measurements that have a signal-to-noise ratio of at least 1.6 (IQ1-5).

• Use the low resolution origins to merge image data to a higher resolution cut-off, e.g. 7Å. After merging, recalculate the origins using the average of all data as reference. In this calculation, include all of the more significant data (IQ1-5, or IQ1-3) up to the new resolution cut-off and check that the origins remain stable (see annotations for program ORIGTILTD). Larger movements of an origin under these circumstances are a good indication that the assigned underfocus is incorrect.

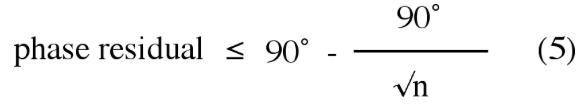

• Stop adding more data and investigate if the phase residuals obtained in the calculation described above start to degrade in the higher resolution bands. This breakdown usually indicates that the starting estimates for the underfocus values are incorrect for several images or (mainly for thick specimens) that the tilt geometry is incorrect. The following relation can be used to estimate if a phase residual reflects meaningful data:

• In this formula “n” represents the number of spots in a given resolution range (note that this estimate will only be meaningful if it is applied to a small number of spots!). For instance, if 25 spots were used to calculate the phase residual in a given resolution band, any phase residual larger than 72˚ would indicate that the “data” are in fact random noise. If the band only contained 9 spots this cut-off would be 60˚. In practice the actual residual should be significantly lower than the upper limit. If in doubt, calculate the residuals for different “IQ-cutoffs”, i.e. use spots with different signal-to-noise ratios.
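
The relation itself is not reproduced in this copy of the text. A form that matches both quoted values (72˚ for 25 spots, 60˚ for 9 spots) is 90˚ × (1 − 1/√n). The sketch below uses that form as an inferred assumption, not as a quotation of the original relation, and the function names are hypothetical.

```python
import math


def random_residual_limit(n_spots):
    """Phase residual (degrees) above which a resolution band looks random.

    Assumed form: 90 * (1 - 1/sqrt(n)). It is inferred from the two worked
    values in the text and is not taken from the original relation.
    """
    if n_spots < 1:
        raise ValueError("need at least one spot")
    return 90.0 * (1.0 - 1.0 / math.sqrt(n_spots))


def exceeds_random_limit(residual_deg, n_spots):
    """True if the band's residual is no better than expected for noise."""
    return residual_deg >= random_residual_limit(n_spots)
```

Passing this check is only a necessary condition: as stated above, a trustworthy residual should lie well below the limit.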

The last point is very important because addition of more images will not improve the situation unless it was guaranteed that their underfocus values and tilt geometries were correct. Phase residuals are a very reliable indicator for the quality and self-consistency of the data. Consequently, whenever the residuals start to degrade a refinement step should be included to avoid randomizing the data.

Data Refinement

In projection, adjustment of the CTF is likely to be the major data refinement step. However, a full refinement of all parameters must be performed if data from images of tilted crystals are to be included (see …). Furthermore, at better than 5Å resolution image data have to be corrected for the influence of beam tilt. This procedure accounts for the fact that the electron beam does not always illuminate the area of interest in a perfectly perpendicular direction. A more detailed explanation for this particular refinement can be found in REF.

There are two fundamentally different approaches to refine the CTF. In the first approach the defocus values of images that display bad phase statistics are systematically changed along one direction (run CTFAPPLY with different input for the defocus) and the phase residual is “plotted” as a function of the change. If the defocus was assigned incorrectly along this direction, the phase residual will drop once the error has been compensated, and the new amount of defocus is fixed. In a second step, the defocus is changed in the direction perpendicular to that of the first step and again the phase residual will indicate if there was a mistake in the original assignment. Finally, if the corrected defocus settings along each direction are different, i.e. if the image is astigmatic, a third pass of corrections is carried out in which the orientation of the CTF is varied. The set of parameters resulting in the lowest phase residual is chosen at this stage. After this procedure has been repeated for all images the data are remerged and should display much better agreement. If the phase statistics are still bad for some images the whole procedure is carried out again but using the already improved data as reference.

The strongest argument for this approach is its total lack of bias because all decisions are purely based on phase residuals. However, if not automated this method is quite time consuming. Furthermore, this approach requires the starting estimates to be sufficiently close to the final values because only a limited number of different settings can reasonably be tried. A second requirement is that the starting phase residuals need to be significantly lower than the upper limits resulting from equation (5) because otherwise the average phase values calculated from all available reference data will not impose constraints that are strong enough to move the data in the right direction.

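
The defocus scan of the first approach can be sketched as follows. This is a simplified illustration and not what CTFAPPLY does internally. It assumes a single isotropic defocus (no astigmatism), a commonly used expression for the CTF, hypothetical microscope defaults (200 kV, Cs 2 mm, 7% amplitude contrast) and the convention that phases are flipped by 180˚ wherever the CTF is positive.

```python
import math


def wavelength(kv):
    """Relativistic electron wavelength in Angstrom for a voltage in kV."""
    volts = kv * 1000.0
    return 12.2643 / math.sqrt(volts + 0.978466e-6 * volts * volts)


def ctf(s, defocus, kv=200.0, cs_mm=2.0, amp=0.07):
    """CTF at spatial frequency s (1/Angstrom).

    defocus is in Angstrom with underfocus positive. The default
    microscope parameters are hypothetical.
    """
    lam = wavelength(kv)
    cs = cs_mm * 1.0e7  # mm to Angstrom
    chi = math.pi * lam * s * s * (defocus - 0.5 * cs * lam * lam * s * s)
    return -math.sqrt(1.0 - amp * amp) * math.sin(chi) - amp * math.cos(chi)


def wrap(deg):
    """Wrap an angle in degrees into the interval [-180, 180)."""
    return (deg + 180.0) % 360.0 - 180.0


def residual(spots, reference, defocus):
    """Mean phase difference to the reference after CTF sign correction.

    spots: {(h, k): (s, raw_phase_deg)}; reference: {(h, k): phase_deg}.
    Assumption: a phase is flipped by 180 degrees where the CTF is positive.
    """
    diffs = []
    for hk, (s, raw) in spots.items():
        if hk in reference:
            corrected = raw + 180.0 if ctf(s, defocus) > 0.0 else raw
            diffs.append(abs(wrap(corrected - reference[hk])))
    if not diffs:
        raise ValueError("no common reflections")
    return sum(diffs) / len(diffs)


def scan_defocus(spots, reference, candidates):
    """Return (defocus, residual) for the candidate with the lowest residual."""
    return min(((d, residual(spots, reference, d)) for d in candidates),
               key=lambda pair: pair[1])
```

For an astigmatic image the same scan would be repeated along the perpendicular direction and then over the orientation of the CTF, as described above.
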
The alternative approach is to assess the data manually, i.e.

• Search a list of merged data for reflections that would agree better with the other data if 180˚ were added to their phases. Often but not always this will be the case for measurements that are close to a zero node in the CTF (the absolute value of the CTF is listed in the last column of the ORIGTILTD output). The following table illustrates the underlying idea:

Table 3 Defocus refinement

The z* values indicate that the measurements are from images of tilted crystals. However, the underlying principle to identify errors in the defocus is the same as for projection data. Note that a total of 8 different images contribute data to this part of the transform. This redundancy allows the bolded measurements from images 6042 and 5574 to be identified unambiguously as wrong. To correct the mistake the defocus of these images needs to be changed so that the CTF value given in the last column changes sign and becomes negative. In practice this moves the reflections through a zero node onto a neighboring band of the modulation. As a consequence the phase values will change by 180˚, bringing both measurements much closer to the other data.

• Make a list containing all measurements that could potentially be corrected by refining the defocus values of the affected images.

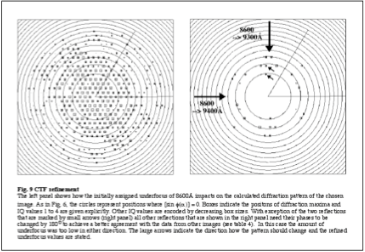

• For each image, make a separate copy of the plot obtained from CTFAPPLY and note any special settings such as “rev (h,k)” that were used in ORIGTILTD (see annotations for ORIGTILTD). Mark all reflections that ideally should be changed and indicate the direction in which the Thon ring pattern should move (outwards: lower the underfocus; inwards: increase underfocus). Usually a clear trend can be observed if the original defocus assignment was incorrect. An example is given in Fig.9 and the effect of the refinement is illustrated in Table 4. Try to include as many of the corrections as possible. If not all changes can be made try to correct spots with better IQ values first, because they have greater weight in the data averaging. If the desirable changes within an image contradict each other leave the underfocus unchanged.
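
The search for measurements that would agree better after adding 180˚ (first step of this list) can be sketched as a comparison of each measurement with the vector average of all others for the same reflection. The data layout and function names are hypothetical, and the sketch ignores IQ weighting and z* differences between measurements.

```python
import math


def wrap(deg):
    """Wrap an angle in degrees into the interval [-180, 180)."""
    return (deg + 180.0) % 360.0 - 180.0


def mean_phase(phases_deg):
    """Vector (circular) mean of a list of phases in degrees."""
    x = sum(math.cos(math.radians(p)) for p in phases_deg)
    y = sum(math.sin(math.radians(p)) for p in phases_deg)
    return math.degrees(math.atan2(y, x))


def flag_flip_candidates(measurements):
    """Return ids of images whose phase fits the others better after +180.

    measurements: list of (image_id, phase_deg) for ONE reflection.
    """
    flagged = []
    for i, (image_id, phase) in enumerate(measurements):
        others = [p for j, (_, p) in enumerate(measurements) if j != i]
        if not others:
            continue
        ref = mean_phase(others)
        as_is = abs(wrap(phase - ref))
        flipped = abs(wrap(phase + 180.0 - ref))
        if flipped < as_is:
            flagged.append(image_id)
    return flagged
```

A flagged measurement is only a candidate for correction. Whether the defocus of the image should actually be changed depends on whether all candidates from that image point in the same direction.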

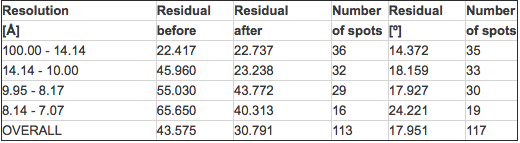

Table 4 Effect of CTF refinement on phase statistics

Phase residuals for table 4 were calculated before and after the CTF refinement using the same uncorrected data set as reference. A further improvement would be expected after including the corrections for all images into the reference data set. To support this argument and to put the numbers into perspective another set of residuals was calculated at a later stage of the analysis. The tremendous improvement can easily be recognized. The high phase residuals at the beginning of the refinement process are mainly caused by the additive effects of small errors emphasizing that every effort should be made to obtain good starting estimates for underfocus, astigmatism and where applicable the tilt geometry.

The advantage of this approach is that the operator will know about changes to come and hence can take these changes into account while evaluating the body of data. Furthermore, reevaluating certain data constellations several times from the perspective of different images will usually reinforce decisions made for other images if there is a “true” solution. The danger in this method is that it can bias the data if the operator’s decisions are wrong. The most likely situation for this to happen is the refinement of a large data set in which the majority of images require significant changes. In this case it can be difficult to maintain the overview necessary to make decisions. A partial solution to this problem is to identify those images where the required changes are unambiguous and to include all these changes into the data set in a single step before moving on to cases that are less clear. As for the first method, refinements must not be included on a “one-image-at-a-time” basis unless the changes are unquestionable, because the data can easily become biased, especially when dealing with a three-dimensional data set. Defocus refinements of a whole data set are likely to take several days, and a “gradual” change of the data set will eliminate the “controversial” data constellations that are necessary to decide what changes are likely to be correct. However, during the process the volume of decisions to remember can become too large. In these cases changes may be included in groups once there is enough reason to assume that they will move the data in the right direction.

Data averaging and generation of projection density maps

After refinement of the image parameters, averaged projection data can be obtained by the following procedure:

• Determine the individual resolution cut-off for each image because not all images may have reliable data to the highest resolution.

• To do this, merge all images to the highest resolution and calculate phase statistics in resolution bands (program: ORIGTILTD, NPROG=0 and 1 respectively) using an IQ5 cut-off. For each image determine the best resolution band for which the phase residuals are still reliable (use relation (5) for estimates). If in doubt, repeat the calculation of the phase statistics using an IQ3 cut-off. If the statistics do not improve significantly for the resolution band(s) in question, truncate the image at a lower, reliable resolution. If however the residuals are reliable to higher resolution under these circumstances one usually can follow this lead because the gain of having consistent IQ3 measurements at higher resolution is often larger than the decrease in the accuracy of the averaged phase values incurred by the weaker IQ4 and 5 measurements. Nevertheless, these are not strict rules and the operator has to make a final decision of what is best.

• Merge the images with the appropriate resolution cut-offs.

• Run program AVRGAMPHS to obtain averaged phase and amplitude values for each structure factor. Replace average amplitudes with averaged electron diffraction amplitudes where applicable. As for the calculation of a common phase origin, the averaging step requires a decision on a z* cut-off if any tilt geometries were explicitly taken into account. Usually a value of [1/(2 × estimated specimen thickness)] will be sufficient.

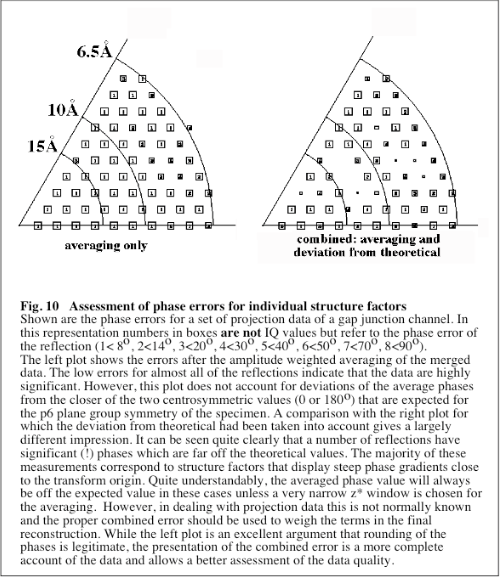

• If the plane group permits, round phases to the closer of 0 or 180˚, adjust the figure of merit obtained from AVRGAMPHS to account for the deviation of the experimental phase from the closer theoretical value and generate a visual representation of the final error for each structure factor. This plot directly shows the final weight that will be given to each structure factor and is a more objective representation of the data than a plot of the figure of merit obtained from AVRGAMPHS (see Fig.10).

• Calculate phase errors in resolution bands to evaluate quality of the averaged data.

Currently, no “standard” programs are available for performing the latter steps. However, “jiffy” routines for these purposes are easy to write or can be obtained together with annotations from our ftp site (programs: FOMSTATS and PLOTALL).
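
A jiffy routine for the rounding step might look like the sketch below. It is not FOMSTATS, PLOTALL or AVRGAMPHS. Two assumptions are made for illustration: the figure of merit is approximated by the length of the weighted vector average of the phases, which is not necessarily the definition used by AVRGAMPHS, and the adjustment for rounding multiplies the figure of merit by the cosine of the deviation from the nearer of 0˚ and 180˚.

```python
import math


def wrap(deg):
    """Wrap an angle in degrees into the interval [-180, 180)."""
    return (deg + 180.0) % 360.0 - 180.0


def average_phase(phases_deg, weights=None):
    """Weighted vector average of phases; returns (phase_deg, fom).

    fom is the resultant length divided by the sum of weights
    (an assumed stand-in for the AVRGAMPHS figure of merit).
    """
    if weights is None:
        weights = [1.0] * len(phases_deg)
    x = sum(w * math.cos(math.radians(p)) for p, w in zip(phases_deg, weights))
    y = sum(w * math.sin(math.radians(p)) for p, w in zip(phases_deg, weights))
    fom = math.hypot(x, y) / sum(weights)
    return math.degrees(math.atan2(y, x)) % 360.0, fom


def round_centrosymmetric(phase_deg, fom):
    """Round a phase to the nearer of 0/180 degrees and down-weight the fom.

    Assumption: adjusted fom = fom * cos(deviation from the rounded value).
    """
    deviation = abs(wrap(phase_deg))
    if deviation <= 90.0:
        return 0.0, fom * math.cos(math.radians(deviation))
    return 180.0, fom * math.cos(math.radians(180.0 - deviation))
```

With this adjustment a structure factor whose averaged phase lies halfway between 0˚ and 180˚ receives a weight of zero.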

After an averaged set of structure factors has been obtained a projection density map can be calculated using standard crystallographic software like CCP4 (ref). Examples for scripts to calculate projections and 3D maps can be obtained from our ftp site. One point that should be mentioned is that the standard CCP4 conventions for the symmetry operators are not necessarily applicable for all of the two-sided plane groups. For instance, CCP4 will choose the y-axis for applying twofold symmetry in p2. However, for the two-sided plane group p2 the twofold axis is oriented along the z-direction and hence the symmetry operators need to be customized to achieve proper symmetry averaging (i.e. instead of “X,Y,Z”/“-X,Y,-Z” specify “X,Y,Z”/“-X,-Y,Z”).
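
The difference between the two operator sets can be checked with a few lines of code. The helper below is hypothetical and only handles operators made of signed X, Y and Z terms without translations. It is not a CCP4 routine.

```python
def apply_operator(op, xyz):
    """Apply an operator such as "-X,-Y,Z" to fractional coordinates.

    Only signed X/Y/Z terms are supported (no translations, no mixing).
    """
    x, y, z = xyz
    values = {"X": x, "Y": y, "Z": z}
    result = []
    for term in op.upper().split(","):
        term = term.strip()
        sign = -1.0 if term.startswith("-") else 1.0
        result.append(sign * values[term.lstrip("+-")])
    return tuple(result)
```

Applied to a point (x, y, z), “-X,-Y,Z” gives (−x, −y, z), a twofold rotation about z that maps (x, y) onto (−x, −y) in projection. “-X,Y,-Z” gives (−x, y, −z), which in projection down z acts like a mirror line and would average the wrong positions.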

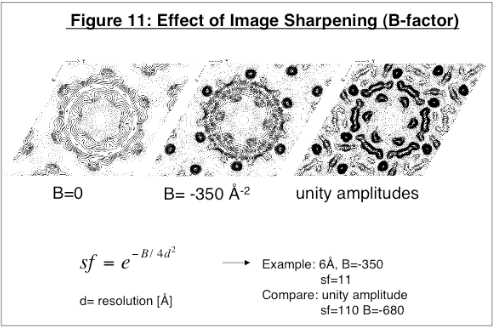

In many cases no electron diffraction data will be available. In this situation the resolution dependent fall-off of the image derived amplitudes needs to be corrected for by choosing an appropriate temperature or B-factor. The dramatic effect of boosting the amplitudes of the higher resolution terms is shown in Fig.11.

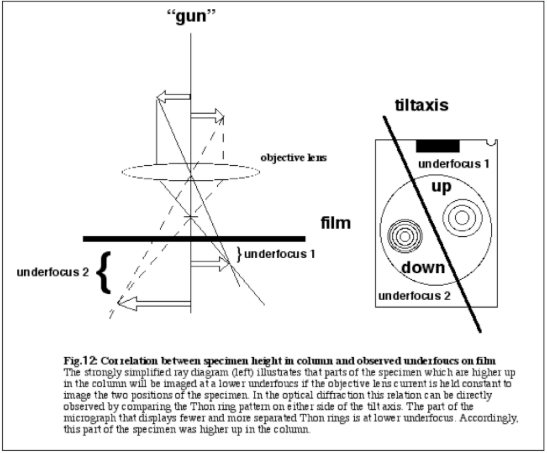

Immediate concerns are the validity of this approach and how an appropriate B-factor can be determined. An argument in favor of this approach is that inaccurate amplitudes affect the appearance of a map far less than inaccurate phases do. As long as the phase values are well determined, only a rough estimate that reflects the relative weights of the terms needs to be known. This is particularly true if the resolution is limited to an intermediate range. The example given in Fig.12 illustrates this point.

Instead of using a B-factor all amplitudes were set to unity and the only weight being used was the experimental figure of merit obtained for the accuracy of the corresponding phase. This procedure completely abolishes the measured relationships between the amplitudes. Nevertheless, the resulting map still contains all the major features of the “sharpened” map shown in Fig.11b, although a few high resolution ripples are evident that are caused by emphasizing some high resolution terms too much. The latter is not surprising since the experimental amplitudes of the higher resolution reflections are on average ~88-fold weaker in the original data, which is not at all reflected after setting all amplitudes to unity. However, this artifact can be removed almost completely by restoring the relative weight of the two strongest reflections (Fig.12b). In this case the approximate ratios between the amplitudes of the three strongest reflections (5.5:4.3:1) were used as a rough estimate for the relative weight because the differences between the amplitudes of these and the remaining reflections were very pronounced.
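
The unity-amplitude experiment can be pictured as a direct Fourier summation in which each term contributes only its phase and its figure of merit. The sketch below is an illustration under stated assumptions, not the CCP4 procedure used for the figures: the term list is assumed to contain all symmetry-related reflections already, Friedel mates are left out because they only double every term, and the function names are hypothetical.

```python
import math


def projection_density(x, y, terms):
    """Density at fractional position (x, y) from a list of terms.

    terms: iterable of (h, k, amplitude, phase_deg, weight).
    """
    total = 0.0
    for h, k, amplitude, phase_deg, weight in terms:
        angle = 2.0 * math.pi * (h * x + k * y) - math.radians(phase_deg)
        total += weight * amplitude * math.cos(angle)
    return total


def with_unity_amplitudes(terms):
    """Replace every amplitude by 1.0, keeping phase and weight."""
    return [(h, k, 1.0, phase, weight) for h, k, _, phase, weight in terms]
```

Evaluating `projection_density` on a grid of (x, y) values with the original and the unity-amplitude terms gives the two maps to compare.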

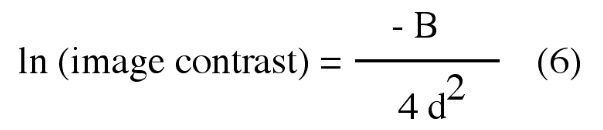

If the original image derived amplitudes are used instead of unity amplitudes the restoration of the correct relative weights can only be achieved by choosing an appropriate B-factor. The following relation provides an estimate for the B-factor that is needed for a full correction:

$$B = 4\,d^{2}\,\ln(\text{scaling factor}) \qquad (6)$$

In equation (6) “d” denotes the resolution in [Å]. For instance, the average amplitude of all but the two strongest reflections was about 22-fold less than the amplitude of the strongest reflection in the data set used to generate Fig.11. This ratio approximates the maximal scaling factor that can reasonably be applied to the reflections with the highest resolution. If the highest resolution was 6.5Å then equation (6) gives: B = ln(22) × 4 × (6.5)² = 522 Å². Application of this B-factor increases the amplitudes of the higher resolution terms to a level that on average is about 4.9 times lower than the amplitude of the strongest reflection. This is in good agreement with the rough estimate obtained from the comparison of the three strongest amplitudes only and emphasizes again that reconstructions will not be very sensitive to inaccuracies in the amplitude values. However, two things need to be pointed out. First, enhancing high-resolution frequencies by using a B-factor will only give a reliable result if the corresponding phases are well determined. Secondly, this approach may not be useful for specimens that are ordered to near atomic resolution. In these cases the fade-out of the image derived amplitudes may not follow a single exponential, which complicates the matter and makes it desirable to use amplitude values obtained from electron diffraction patterns.
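
The arithmetic of the worked example can be checked with a short sketch. The function names are hypothetical. The scale applied at resolution d is taken to be exp(B / (4 d²)), which is the form implied by the worked example.

```python
import math


def b_factor_for_full_correction(scaling_factor, d_highest):
    """B-factor (A^2) that boosts amplitudes at d_highest by scaling_factor."""
    return 4.0 * d_highest * d_highest * math.log(scaling_factor)


def sharpening_scale(b_factor, d):
    """Factor by which an amplitude at resolution d (A) is multiplied."""
    return math.exp(b_factor / (4.0 * d * d))


b = b_factor_for_full_correction(22.0, 6.5)
print(round(b))                              # 522
print(round(sharpening_scale(b, 6.5), 1))    # 22.0
```

Because the scale depends on 1/d², a reflection at 13Å is boosted only by about 2.2 with the same B-factor, so the low resolution terms keep roughly their measured relationships.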